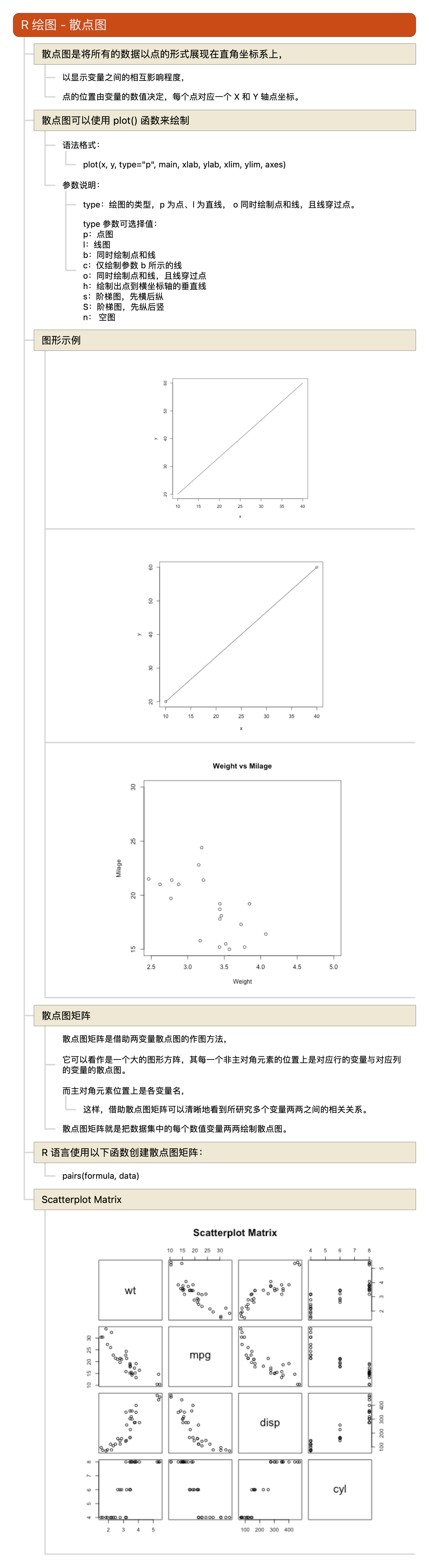

R Language Tutorial - Scatter Plots

Posted in multiple-programming-languages, R

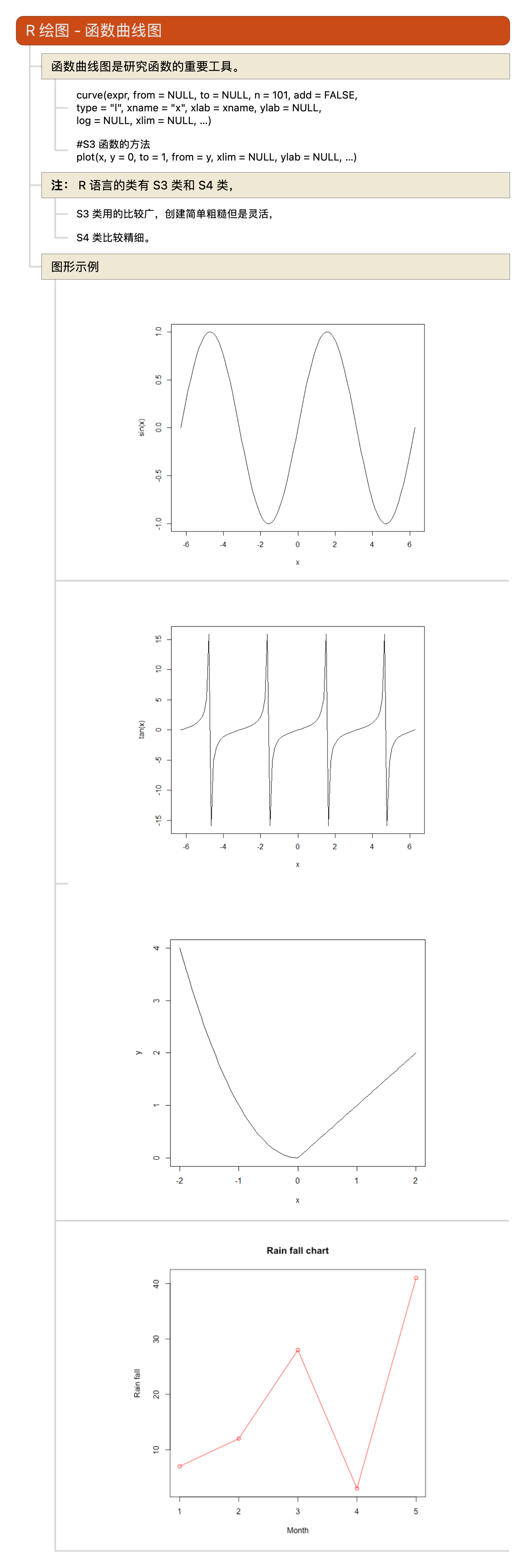

R Language Tutorial - Function Curve Plots

Posted in multiple-programming-languages, R

R Language Tutorial - Chinese Language Support

Posted in multiple-programming-languages, R

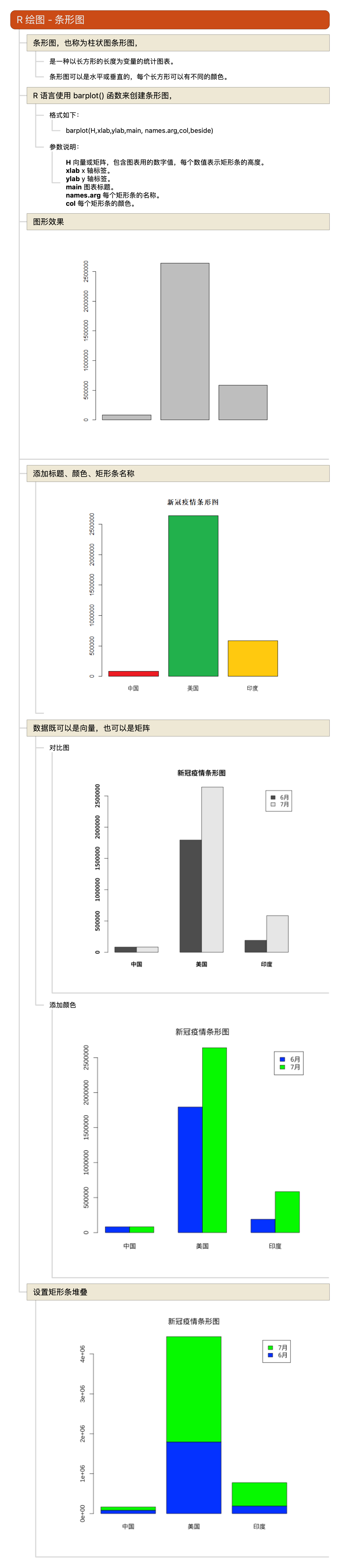

R Language Tutorial - Bar Charts

Posted in multiple-programming-languages, R

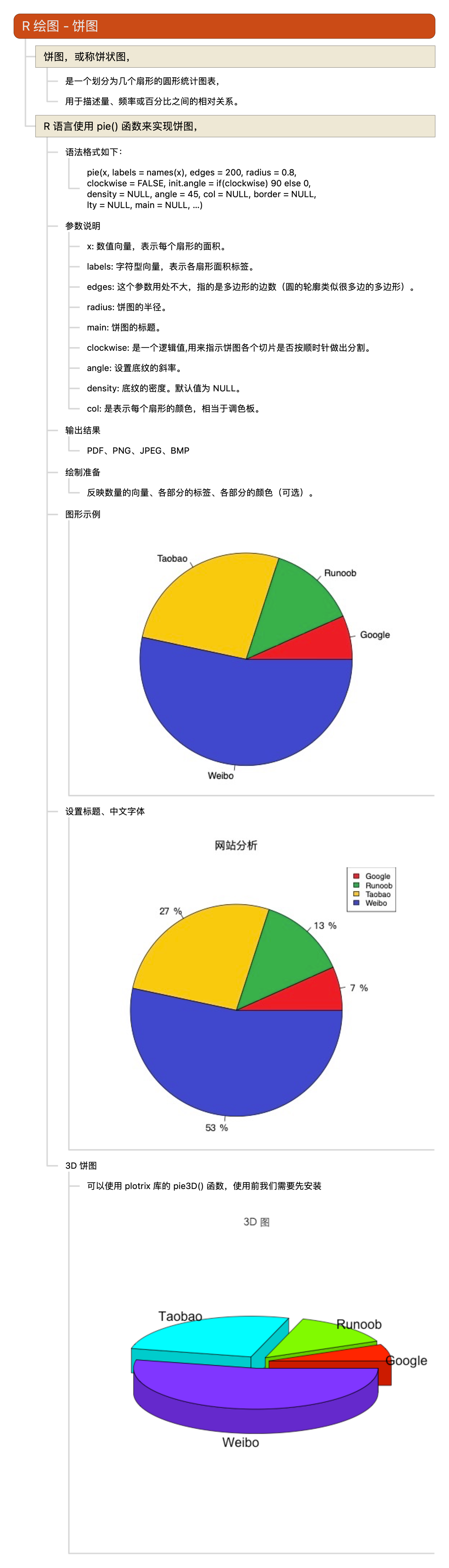

R Language Tutorial - Pie Charts

Posted in multiple-programming-languages, R

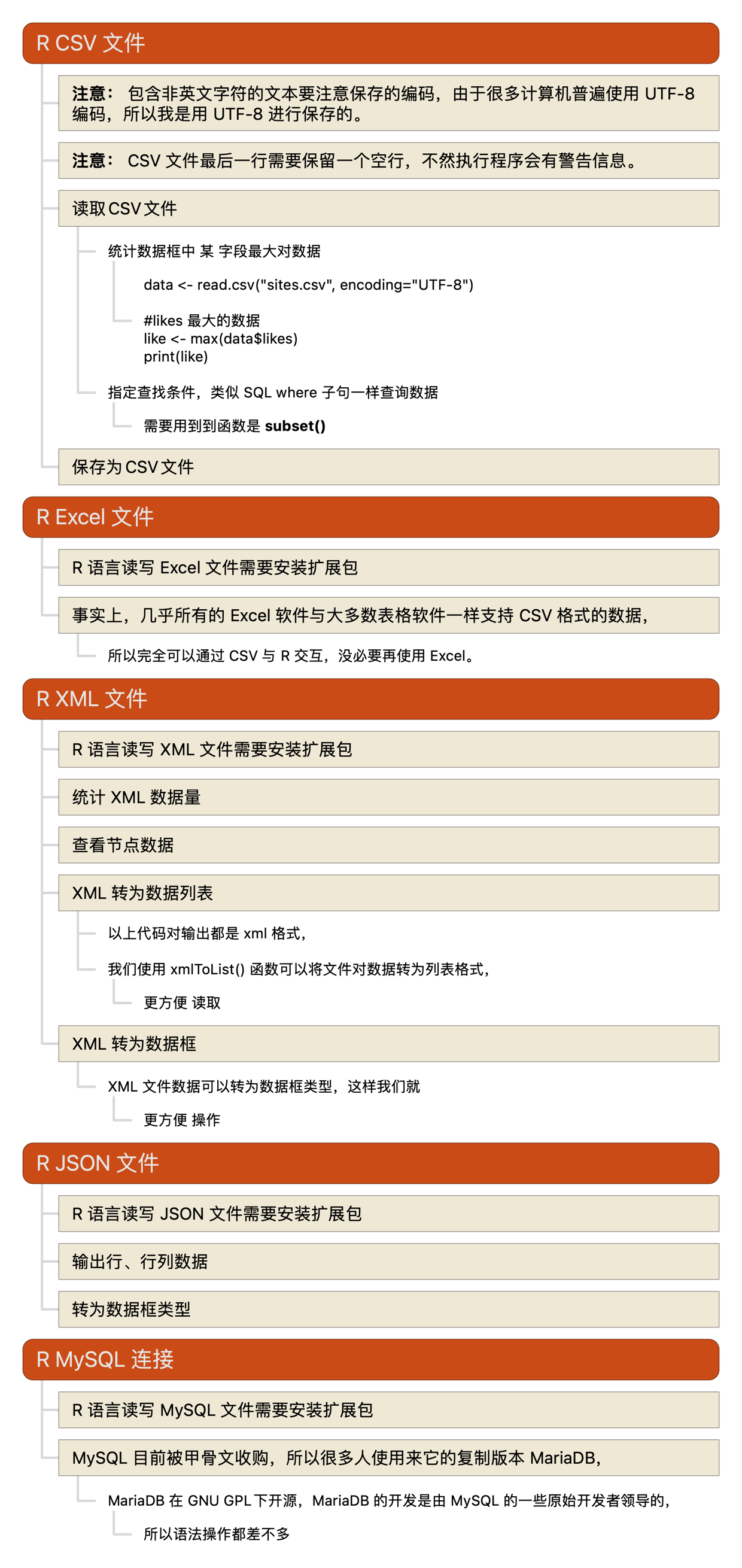

R Language Tutorial - CSV, Excel, XML, JSON, MySQL

Posted in multiple-programming-languages, R

R Language Tutorial - Packages

Posted in multiple-programming-languages, R

R Language Tutorial - Data Reshaping

Posted in multiple-programming-languages, R

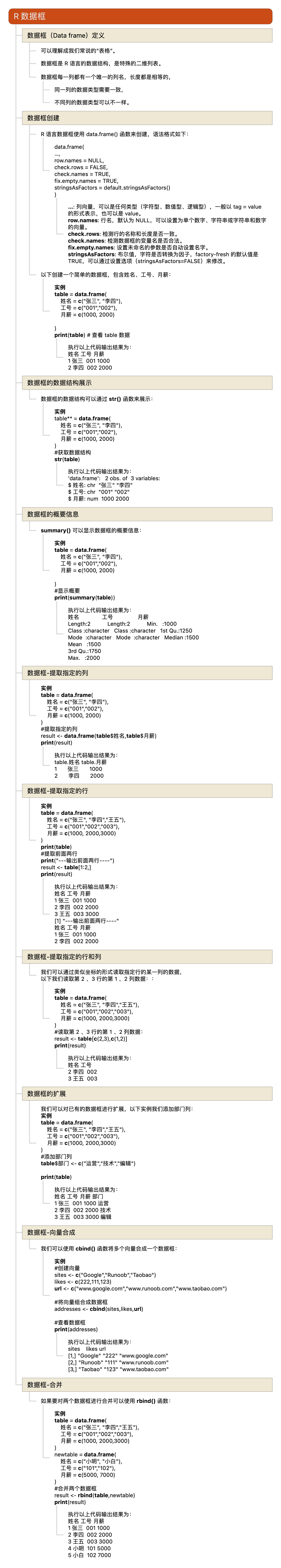

R Language Tutorial - Data Frames

Posted in multiple-programming-languages, R