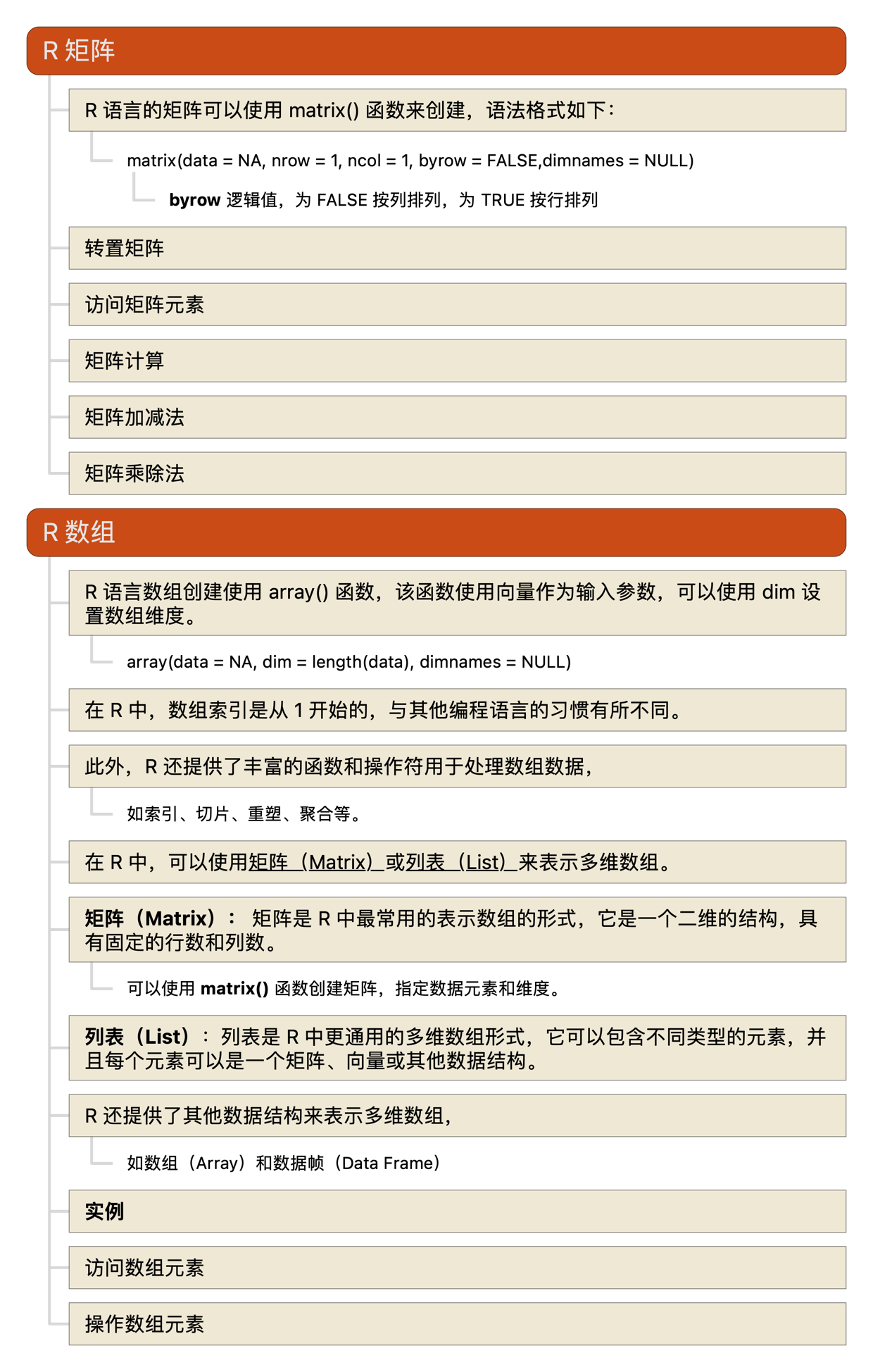

R Language Tutorial: Matrices and Arrays

Posted on · In: multiple-programming-languages, R

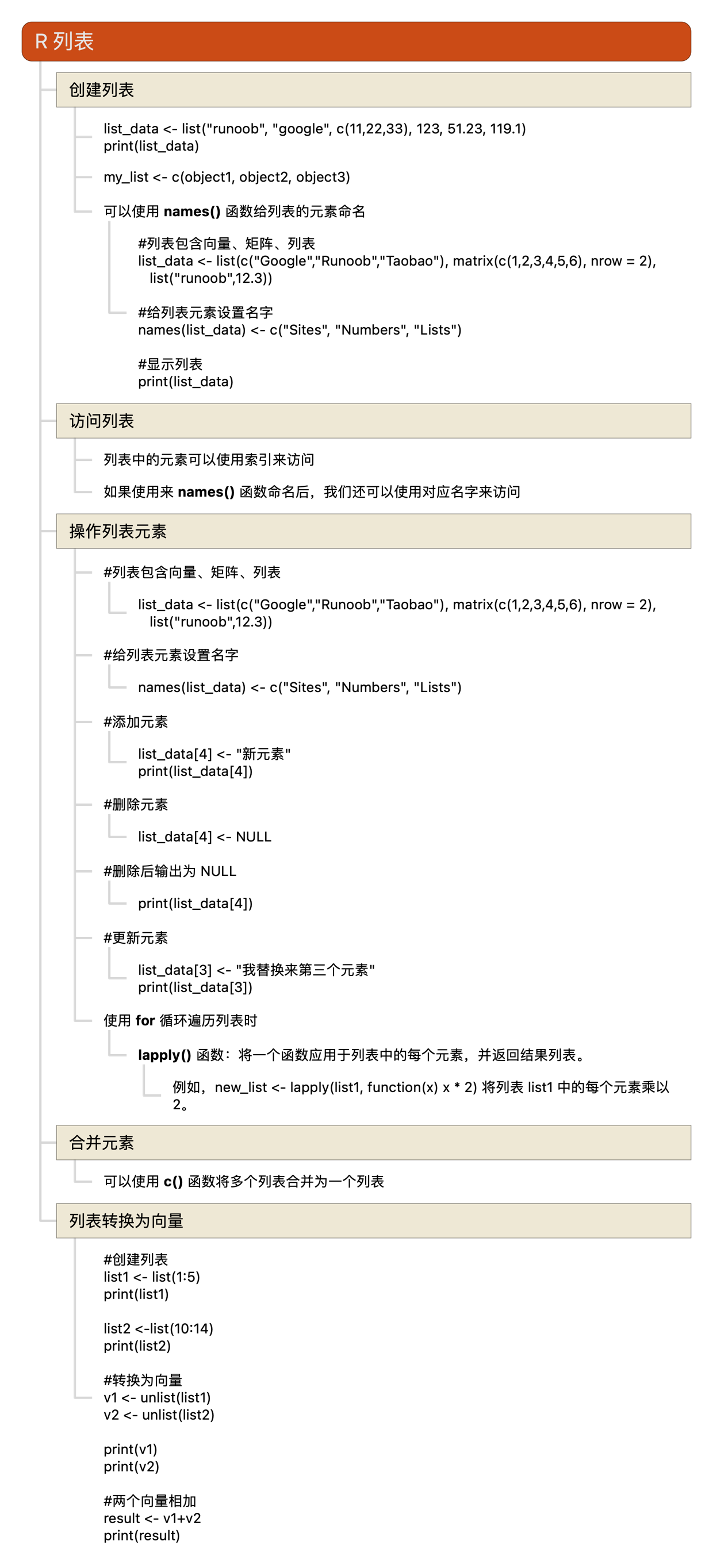

R Language Tutorial: Lists

Posted on · In: multiple-programming-languages, R

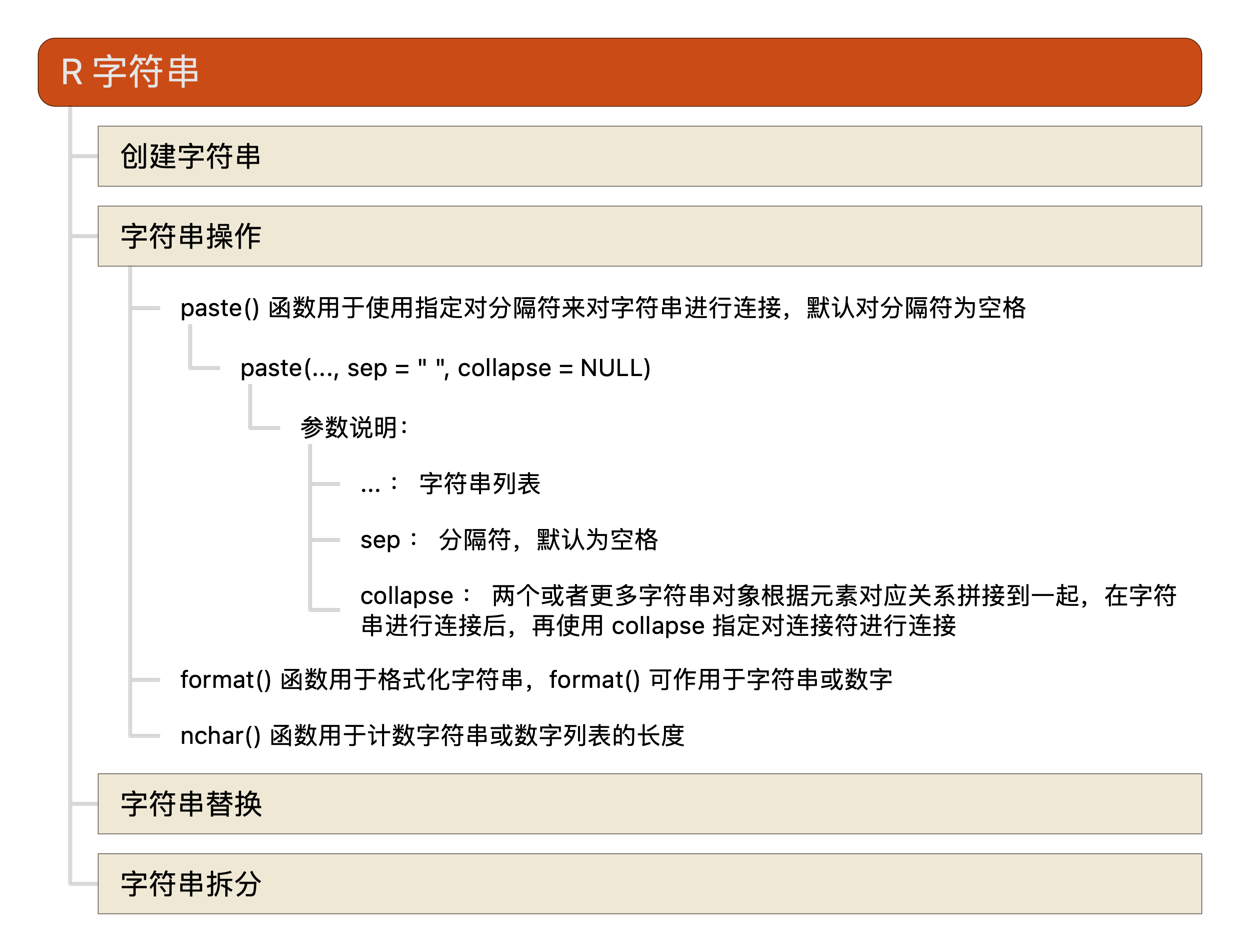

R Language Tutorial: Strings

Posted on · In: multiple-programming-languages, R

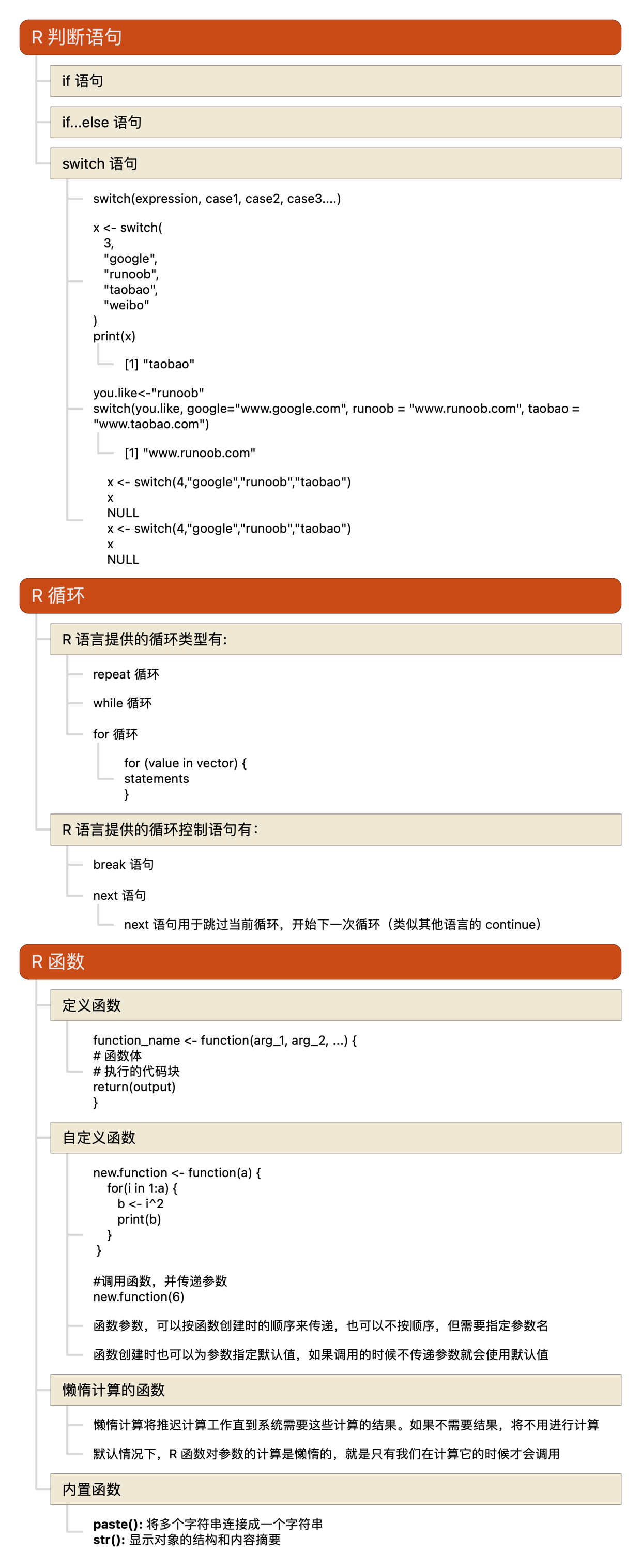

R Language Tutorial: Conditionals, Loops, and Functions

Posted on · In: multiple-programming-languages, R

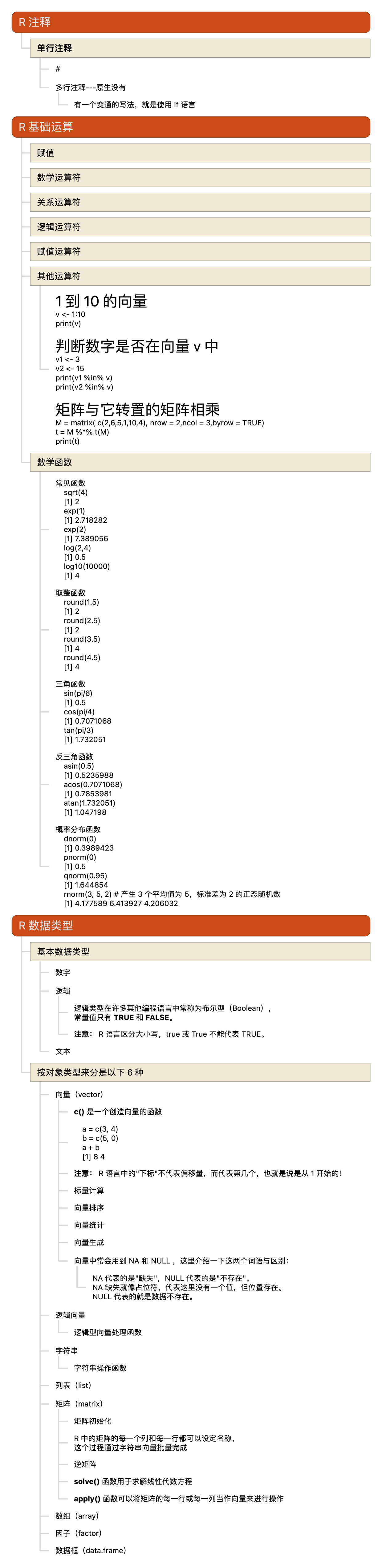

R Language Tutorial: Comments, Basic Operations, and Data Types

Posted on · In: multiple-programming-languages, R

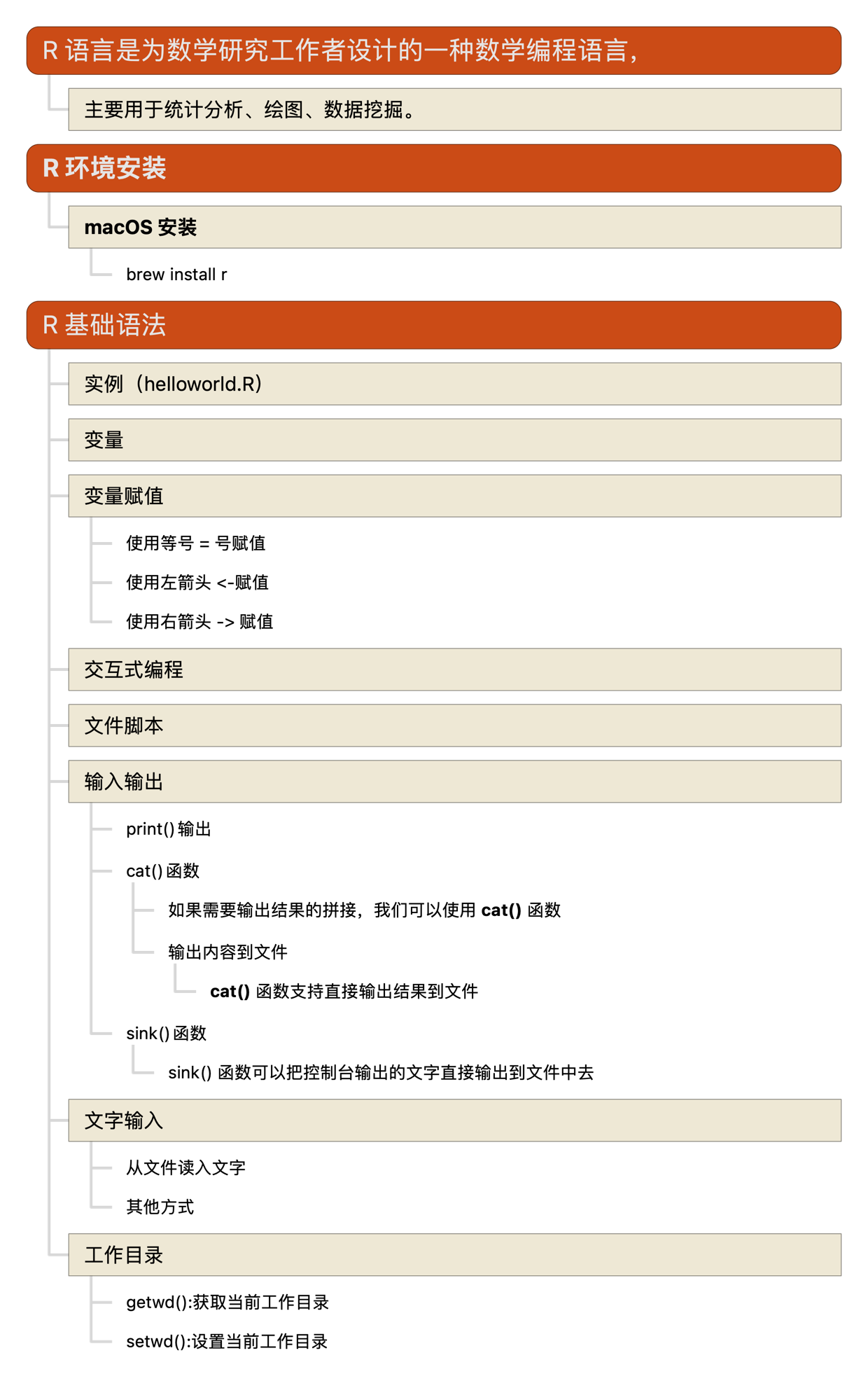

R Language Tutorial: Environment Setup and Basic Syntax

Posted on · In: multiple-programming-languages, R

Hello World

Posted on · Edited on

Welcome to Hexo! This is your very first post. Check the documentation for more info. If you run into any problems while using Hexo, you can find the answer in the troubleshooting guide, or you can ask me on GitHub.

Quick Start

Create a new post

$ hexo new "My New Post"

More info: Writing

Run server

$ hexo server

More info: Server

Generate static files

$ hexo generate

More info: Generating

Deploy to remote sites

$ hexo deploy

More info: Deployment

Test-Hexo

Posted on · Edited on

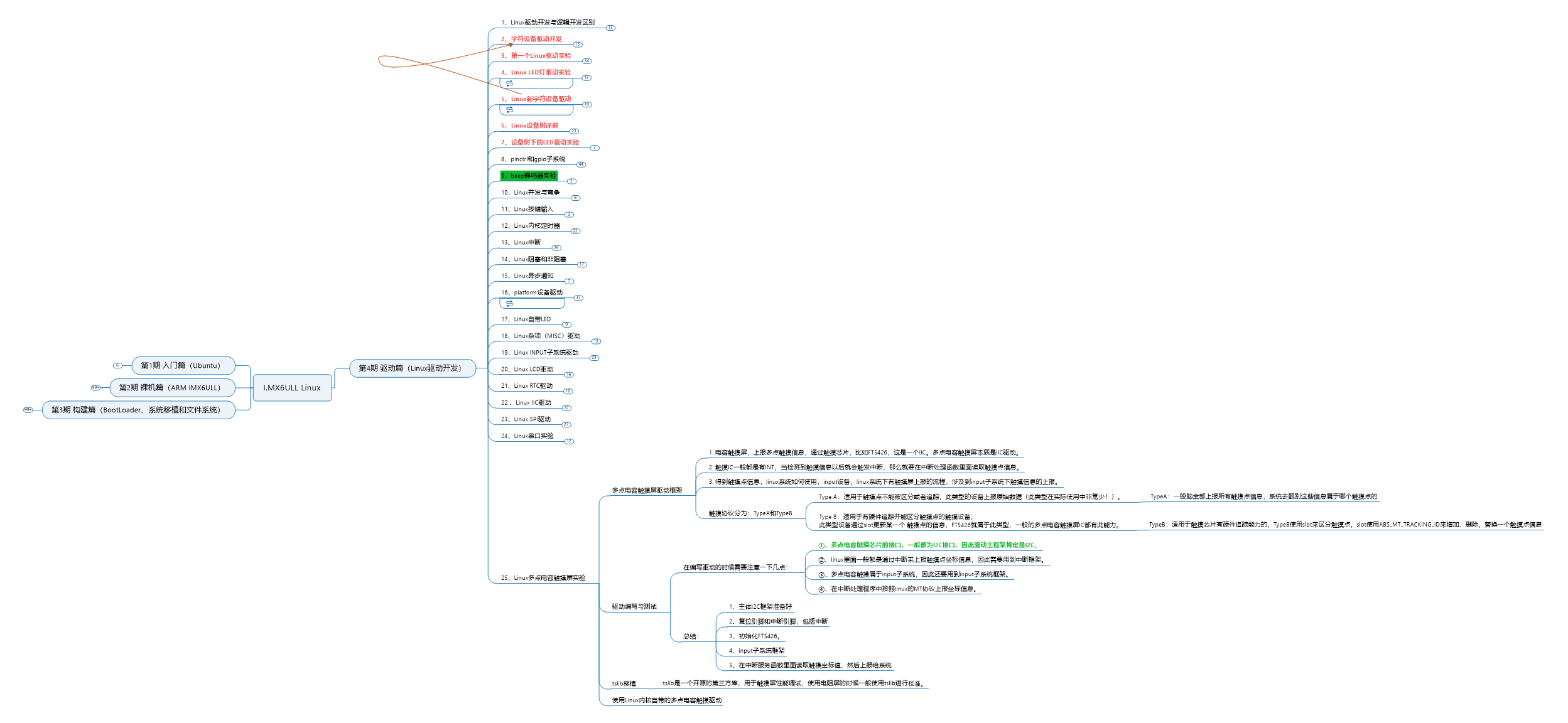

I.MX6ULL Linux Drivers