Opening

```cpp
#include <opencv2/imgproc.hpp>

using namespace cv;

// morphologyElement: 0 = rectangle, 1 = cross, 2 = ellipse
// morphologySize: element "radius"; the element is (2*morphologySize + 1) pixels square
Mat performOpening(const Mat& inputImage, int morphologyElement, int morphologySize)
{
    Mat outputImage, tempImage;

    // Map the element selector to an OpenCV shape, defaulting to a rectangle
    // so morphologyType is never used uninitialized
    int morphologyType = MORPH_RECT;
    if (morphologyElement == 1)
        morphologyType = MORPH_CROSS;
    else if (morphologyElement == 2)
        morphologyType = MORPH_ELLIPSE;

    // Create the structuring element, anchored at its centre
    Mat element = getStructuringElement(morphologyType,
                                        Size(2 * morphologySize + 1, 2 * morphologySize + 1),
                                        Point(morphologySize, morphologySize));

    // Opening = erosion followed by dilation with the same element
    erode(inputImage, tempImage, element);
    dilate(tempImage, outputImage, element);

    return outputImage;
}
```
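The following dependency-free sketch shows what `erode` and `dilate` compute in the `MORPH_RECT` case. Erosion takes the minimum over a (2r + 1) × (2r + 1) window centred on each pixel, and dilation takes the maximum. Out-of-range neighbours are skipped. We believe this matches the effect of OpenCV's default border value for these two functions, but that is an assumption here. `Image`, `rankFilter`, `erodeRect`, `dilateRect` and `openRect` are hypothetical names for illustration, not OpenCV API.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical row-major grayscale image type, for illustration only
using Image = std::vector<std::vector<int>>;

// Min (takeMax = false, erosion) or max (takeMax = true, dilation) over a flat
// (2*r + 1) x (2*r + 1) square window centred on each pixel. Neighbours that
// fall outside the image are skipped rather than padded.
Image rankFilter(const Image& in, int r, bool takeMax) {
    assert(r >= 0);
    const int rows = static_cast<int>(in.size());
    const int cols = rows > 0 ? static_cast<int>(in[0].size()) : 0;
    Image out = in;
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            int best = in[y][x];
            for (int yy = std::max(0, y - r); yy <= std::min(rows - 1, y + r); ++yy)
                for (int xx = std::max(0, x - r); xx <= std::min(cols - 1, x + r); ++xx)
                    best = takeMax ? std::max(best, in[yy][xx]) : std::min(best, in[yy][xx]);
            out[y][x] = best;
        }
    }
    return out;
}

Image erodeRect(const Image& in, int r) { return rankFilter(in, r, false); }
Image dilateRect(const Image& in, int r) { return rankFilter(in, r, true); }

// Opening: erosion followed by dilation with the same square element
Image openRect(const Image& in, int r) { return dilateRect(erodeRect(in, r), r); }
```

Consider a 7×7 black image that holds a 3×3 block of value 200 and a single isolated pixel of value 255. `openRect(img, 1)` leaves the block intact and zeroes the isolated pixel, because the 3×3 element fits inside the block but not inside the lone pixel.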

Closing

```cpp
Mat performClosing(const Mat& inputImage, int morphologyElement, int morphologySize)
{
    Mat outputImage, tempImage;

    // Map the element selector (0 = rectangle, 1 = cross, 2 = ellipse) to an
    // OpenCV shape, defaulting to a rectangle for any other value
    int morphologyType = MORPH_RECT;
    if (morphologyElement == 1)
        morphologyType = MORPH_CROSS;
    else if (morphologyElement == 2)
        morphologyType = MORPH_ELLIPSE;

    // Create the structuring element, anchored at its centre
    Mat element = getStructuringElement(morphologyType,
                                        Size(2 * morphologySize + 1, 2 * morphologySize + 1),
                                        Point(morphologySize, morphologySize));

    // Closing = dilation followed by erosion with the same element
    dilate(inputImage, tempImage, element);
    erode(tempImage, outputImage, element);

    return outputImage;
}
```
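A single image row is enough to see why closing fills dark gaps narrower than the element. The sketch below works on a `std::vector<int>` with a flat window of width 2r + 1, which is a 1-D stand-in for `MORPH_RECT`. Samples past either end are skipped. `slideMinMax` and `close1d` are hypothetical helpers, not OpenCV functions.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Sliding min (takeMax = false) or max (takeMax = true) over a flat window
// of width 2*r + 1; samples past either end of the row are skipped
std::vector<int> slideMinMax(const std::vector<int>& s, int r, bool takeMax) {
    assert(r >= 0);
    const int n = static_cast<int>(s.size());
    std::vector<int> out(s.size());
    for (int i = 0; i < n; ++i) {
        int best = s[i];
        for (int j = std::max(0, i - r); j <= std::min(n - 1, i + r); ++j)
            best = takeMax ? std::max(best, s[j]) : std::min(best, s[j]);
        out[i] = best;
    }
    return out;
}

// Closing: dilation (max) first, then erosion (min) with the same window
std::vector<int> close1d(const std::vector<int>& s, int r) {
    return slideMinMax(slideMinMax(s, r, true), r, false);
}
```

`close1d({9, 9, 9, 0, 9, 9, 9}, 1)` returns all nines. A dark run four samples long survives unchanged, because it is wider than the 3-sample window.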

Morphological Gradient

```cpp
Mat performMorphologicalGradient(const Mat& inputImage, int morphologyElement, int morphologySize)
{
    Mat dilated, eroded;

    // Map the element selector (0 = rectangle, 1 = cross, 2 = ellipse) to an
    // OpenCV shape, defaulting to a rectangle for any other value
    int morphologyType = MORPH_RECT;
    if (morphologyElement == 1)
        morphologyType = MORPH_CROSS;
    else if (morphologyElement == 2)
        morphologyType = MORPH_ELLIPSE;

    // Create the structuring element, anchored at its centre
    Mat element = getStructuringElement(morphologyType,
                                        Size(2 * morphologySize + 1, 2 * morphologySize + 1),
                                        Point(morphologySize, morphologySize));

    // Gradient = dilation minus erosion. The element contains its anchor, so
    // dilation >= erosion at every pixel and the subtraction never saturates.
    dilate(inputImage, dilated, element);
    erode(inputImage, eroded, element);

    return dilated - eroded;
}
```
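To show where the gradient is nonzero, here is a 1-D sketch on a single row. It uses a flat window of width 2r + 1 (an assumed stand-in for `MORPH_RECT`) and skips samples past the ends. `slideMinMax` and `gradient1d` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Sliding min (takeMax = false) or max (takeMax = true) over a flat window
// of width 2*r + 1; samples past either end of the row are skipped
std::vector<int> slideMinMax(const std::vector<int>& s, int r, bool takeMax) {
    assert(r >= 0);
    const int n = static_cast<int>(s.size());
    std::vector<int> out(s.size());
    for (int i = 0; i < n; ++i) {
        int best = s[i];
        for (int j = std::max(0, i - r); j <= std::min(n - 1, i + r); ++j)
            best = takeMax ? std::max(best, s[j]) : std::min(best, s[j]);
        out[i] = best;
    }
    return out;
}

// Morphological gradient: dilation minus erosion. The window always contains
// the centre sample, so each difference is >= 0.
std::vector<int> gradient1d(const std::vector<int>& s, int r) {
    const std::vector<int> d = slideMinMax(s, r, true);
    const std::vector<int> e = slideMinMax(s, r, false);
    std::vector<int> g(s.size());
    for (std::size_t i = 0; i < s.size(); ++i) g[i] = d[i] - e[i];
    return g;
}
```

For the step `{0, 0, 0, 10, 10, 10}` with `r = 1`, `gradient1d` returns `{0, 0, 10, 10, 0, 0}`. The result is a band two samples wide straddling the edge, and it is zero in flat regions.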

TopHat

```cpp
Mat performTopHat(const Mat& inputImage, int morphologyElement, int morphologySize)
{
    // Top hat = input minus its opening. performOpening builds its own
    // structuring element, so none is needed here. The opening never exceeds
    // the input, so the subtraction does not saturate.
    return inputImage - performOpening(inputImage, morphologyElement, morphologySize);
}
```
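The top hat keeps only the bright detail that the opening removed, meaning structures smaller than the element. The 1-D sketch below illustrates this on a single row. It assumes a flat window of width 2r + 1 with samples past the ends skipped. `slideMinMax` and `topHat1d` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Sliding min (takeMax = false) or max (takeMax = true) over a flat window
// of width 2*r + 1; samples past either end of the row are skipped
std::vector<int> slideMinMax(const std::vector<int>& s, int r, bool takeMax) {
    assert(r >= 0);
    const int n = static_cast<int>(s.size());
    std::vector<int> out(s.size());
    for (int i = 0; i < n; ++i) {
        int best = s[i];
        for (int j = std::max(0, i - r); j <= std::min(n - 1, i + r); ++j)
            best = takeMax ? std::max(best, s[j]) : std::min(best, s[j]);
        out[i] = best;
    }
    return out;
}

// Top hat: signal minus its opening (erosion, then dilation)
std::vector<int> topHat1d(const std::vector<int>& s, int r) {
    const std::vector<int> opened = slideMinMax(slideMinMax(s, r, false), r, true);
    std::vector<int> out(s.size());
    for (std::size_t i = 0; i < s.size(); ++i) out[i] = s[i] - opened[i];  // never negative
    return out;
}
```

A one-sample peak on a flat background, `{5, 5, 5, 9, 5, 5, 5}`, gives `{0, 0, 0, 4, 0, 0, 0}` with `r = 1`. A plateau at least as wide as the window, as in `{5, 9, 9, 9, 5}`, survives the opening and therefore yields all zeros.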

BlackHat

```cpp
Mat performBlackHat(const Mat& inputImage, int morphologyElement, int morphologySize)
{
    // Black hat = closing minus input. performClosing builds its own
    // structuring element, so none is needed here. The closing is never below
    // the input, so the subtraction does not saturate.
    return performClosing(inputImage, morphologyElement, morphologySize) - inputImage;
}
```
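The black hat is the dual of the top hat. It picks out dark detail smaller than the element, which the closing fills in. Below is a 1-D sketch on a single row under the same assumptions as before: a flat window of width 2r + 1, with samples past the ends skipped. `slideMinMax` and `blackHat1d` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Sliding min (takeMax = false) or max (takeMax = true) over a flat window
// of width 2*r + 1; samples past either end of the row are skipped
std::vector<int> slideMinMax(const std::vector<int>& s, int r, bool takeMax) {
    assert(r >= 0);
    const int n = static_cast<int>(s.size());
    std::vector<int> out(s.size());
    for (int i = 0; i < n; ++i) {
        int best = s[i];
        for (int j = std::max(0, i - r); j <= std::min(n - 1, i + r); ++j)
            best = takeMax ? std::max(best, s[j]) : std::min(best, s[j]);
        out[i] = best;
    }
    return out;
}

// Black hat: closing (dilation, then erosion) minus the signal
std::vector<int> blackHat1d(const std::vector<int>& s, int r) {
    const std::vector<int> closed = slideMinMax(slideMinMax(s, r, true), r, false);
    std::vector<int> out(s.size());
    for (std::size_t i = 0; i < s.size(); ++i) out[i] = closed[i] - s[i];  // never negative
    return out;
}
```

A one-sample dip, `{5, 5, 5, 1, 5, 5, 5}`, gives `{0, 0, 0, 4, 0, 0, 0}` with `r = 1`. A valley as wide as the window, as in `{5, 1, 1, 1, 5}`, is not filled by the closing and yields all zeros.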

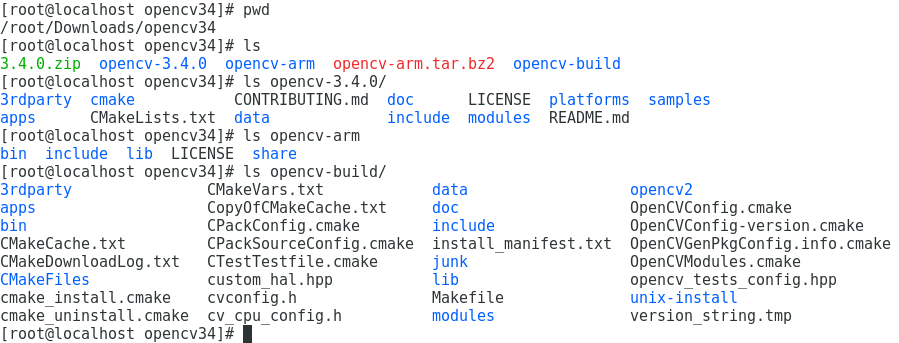

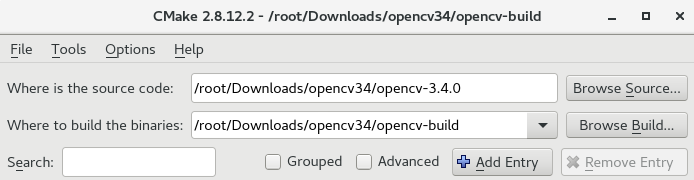
