[root@localhost ~]# systemctl stop firewalld.service

[root@localhost ~]# systemctl disable firewalld.service

[root@localhost ~]# firewall-cmd --state

Disable SELinux

[root@localhost ~]# sed -i '/^SELINUX=.*/c SELINUX=disabled' /etc/selinux/config

[root@localhost ~]# sed -i 's/^SELINUXTYPE=.*/SELINUXTYPE=disabled/g' /etc/selinux/config

[root@localhost ~]# grep --color=auto '^SELINUX' /etc/selinux/config

[root@localhost ~]# setenforce 0
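
To confirm that the persistent setting took effect, a small check like the one below may help. It is only a sketch: `selinux_config_mode` is a hypothetical helper name, and it assumes the config file uses plain `SELINUX=value` lines as shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the SELINUX= mode stored in a config file.
# Defaults to /etc/selinux/config when no path is given.
selinux_config_mode() {
    config_file="${1:-/etc/selinux/config}"
    # Only lines starting with "SELINUX=" match; "SELINUXTYPE=" does not.
    # If the key appears more than once, the last occurrence is printed.
    sed -n 's/^SELINUX=//p' "$config_file" | tail -n 1
}

# Example: compare the persistent setting with the running mode.
# echo "config:  $(selinux_config_mode)"
# echo "runtime: $(getenforce)"
```

Note that `setenforce 0` only switches the running system to permissive mode; the `disabled` value in the config file applies after the next reboot.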

Synchronize time

Refer to "CLOUD COMPUTING -> OpenStack High Availability -> 3. Create Local NTP"

Set HostName

[root@localhost ~]# hostnamectl set-hostname controller1

[root@localhost ~]# hostnamectl set-hostname controller2

[root@localhost ~]# hostnamectl set-hostname controller3

[root@localhost ~]# hostnamectl set-hostname compute01

[root@localhost ~]# hostnamectl set-hostname compute02

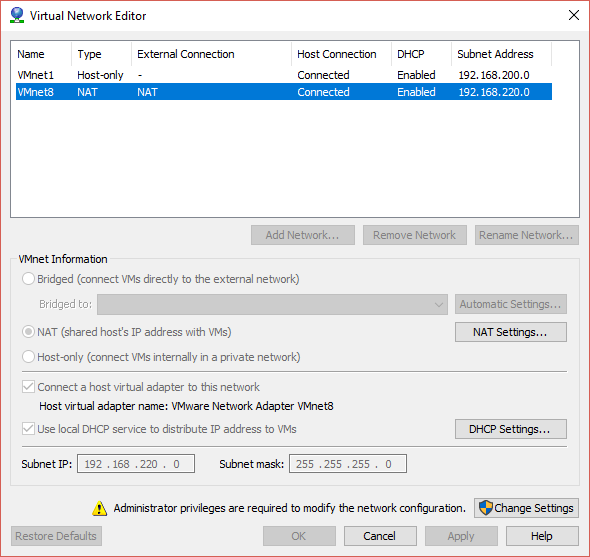

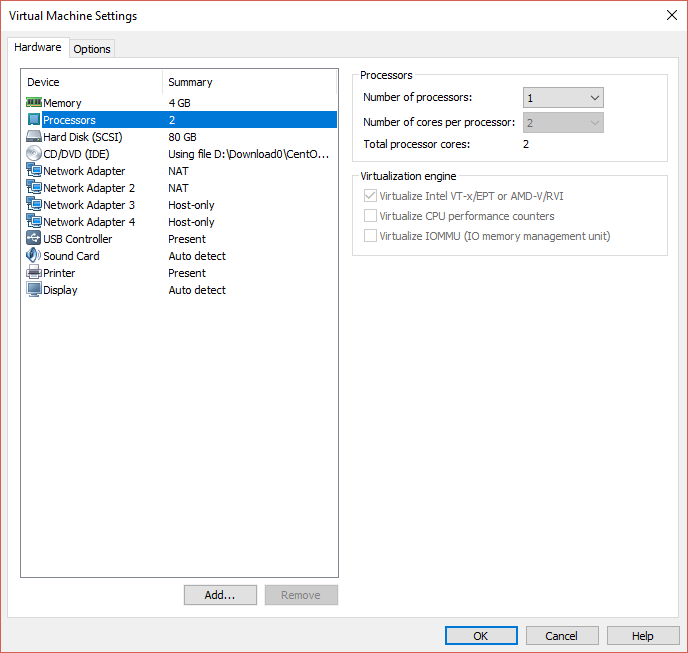

192.168.200.0: Host-only; 192.168.220.0: NAT

Controller1

[root@controller1 ~]# ls -al /etc/sysconfig/network-scripts/ifcfg-*

-rw-r--r-- 1 root root 369 Dec 13 22:22 /etc/sysconfig/network-scripts/ifcfg-ens33

-rw-r--r-- 1 root root 369 Dec 13 22:23 /etc/sysconfig/network-scripts/ifcfg-ens37

-rw-r--r-- 1 root root 326 Dec 13 22:26 /etc/sysconfig/network-scripts/ifcfg-ens38

-rw-r--r-- 1 root root 326 Dec 13 22:28 /etc/sysconfig/network-scripts/ifcfg-ens39

-rw-r--r--. 1 root root 254 May 3 2017 /etc/sysconfig/network-scripts/ifcfg-lo

Update the 1st network adapter:

[root@controller1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=9eb00433-c8b9-4be2-af53-50cab2247226

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.220.11

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 2nd network adapter:

[root@controller1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

UUID=cf7a62c9-e135-4488-b252-99717664941c

DEVICE=ens37

ONBOOT=yes

IPADDR=192.168.220.21

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 3rd network adapter:

[root@controller1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens38

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens38

UUID=b077534d-3154-43ca-a240-ac811f255f42

DEVICE=ens38

ONBOOT=yes

IPADDR=192.168.200.11

NETMASK=255.255.255.0

Update the 4th network adapter:

[root@controller1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens39

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens39

UUID=ea5e0f92-8f8c-457f-a693-f946c623d0e7

DEVICE=ens39

ONBOOT=yes

IPADDR=192.168.200.21

NETMASK=255.255.255.0
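
Since the adapter files differ only in device name, address and (for the NAT network) gateway, they could be generated instead of edited by hand. The following is a minimal sketch, not the method used in this guide: `make_ifcfg` is a hypothetical helper, and it deliberately omits the UUID and IPv6 keys shown in the listings above.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal static ifcfg file to stdout.
# Usage: make_ifcfg DEVICE IPADDR [GATEWAY]
make_ifcfg() {
    device="$1"
    ipaddr="$2"
    gateway="${3:-}"
    cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$device
DEVICE=$device
ONBOOT=yes
IPADDR=$ipaddr
NETMASK=255.255.255.0
EOF
    # Only the NAT-facing adapters in this guide carry a gateway and DNS server.
    if [ -n "$gateway" ]; then
        echo "GATEWAY=$gateway"
        echo "DNS1=114.114.114.114"
    fi
}

# Example (review the output before writing it to network-scripts):
# make_ifcfg ens33 192.168.220.11 192.168.220.2
# make_ifcfg ens38 192.168.200.11
```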

Controller2

[root@controller2 ~]# ls -al /etc/sysconfig/network-scripts/ifcfg-*

-rw-r--r-- 1 root root 369 Dec 13 22:22 /etc/sysconfig/network-scripts/ifcfg-ens33

-rw-r--r-- 1 root root 369 Dec 13 22:23 /etc/sysconfig/network-scripts/ifcfg-ens37

-rw-r--r-- 1 root root 326 Dec 13 22:26 /etc/sysconfig/network-scripts/ifcfg-ens38

-rw-r--r-- 1 root root 326 Dec 13 22:28 /etc/sysconfig/network-scripts/ifcfg-ens39

-rw-r--r--. 1 root root 254 May 3 2017 /etc/sysconfig/network-scripts/ifcfg-lo

Update the 1st network adapter:

[root@controller2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=14a0d64d-bca2-4b37-ac1d-460897567fff

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.220.12

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 2nd network adapter:

[root@controller2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

UUID=c382316f-97d8-4e3f-a82b-b48cd6d0d025

DEVICE=ens37

ONBOOT=yes

IPADDR=192.168.220.22

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 3rd network adapter:

[root@controller2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens38

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens38

UUID=f727a6f1-0ae0-4c0d-aa54-fbaefb3570c7

DEVICE=ens38

ONBOOT=yes

IPADDR=192.168.200.12

NETMASK=255.255.255.0

Update the 4th network adapter:

[root@controller2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens39

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens39

UUID=b56c3af9-2844-47e3-9e30-ef078640b2f1

DEVICE=ens39

ONBOOT=yes

IPADDR=192.168.200.22

NETMASK=255.255.255.0

Controller3

[root@controller3 ~]# ls -al /etc/sysconfig/network-scripts/ifcfg-*

-rw-r--r-- 1 root root 369 Dec 13 22:22 /etc/sysconfig/network-scripts/ifcfg-ens33

-rw-r--r-- 1 root root 369 Dec 13 22:23 /etc/sysconfig/network-scripts/ifcfg-ens37

-rw-r--r-- 1 root root 326 Dec 13 22:26 /etc/sysconfig/network-scripts/ifcfg-ens38

-rw-r--r-- 1 root root 326 Dec 13 22:28 /etc/sysconfig/network-scripts/ifcfg-ens39

-rw-r--r--. 1 root root 254 May 3 2017 /etc/sysconfig/network-scripts/ifcfg-lo

Update the 1st network adapter:

[root@controller3 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=5ca30d63-789d-4df4-9f98-29aa74945e1b

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.220.13

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 2nd network adapter:

[root@controller3 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

UUID=8cb6cd8b-304f-4148-96bb-326976276d17

DEVICE=ens37

ONBOOT=yes

IPADDR=192.168.220.23

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 3rd network adapter:

[root@controller3 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens38

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens38

UUID=9d8e2ebb-ded2-4d51-8b77-072ccfc269c3

DEVICE=ens38

ONBOOT=yes

IPADDR=192.168.200.13

NETMASK=255.255.255.0

Update the 4th network adapter:

[root@controller3 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens39

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens39

UUID=639fdbe3-b6f4-4f8d-b988-b9e01ef5041e

DEVICE=ens39

ONBOOT=yes

IPADDR=192.168.200.23

NETMASK=255.255.255.0

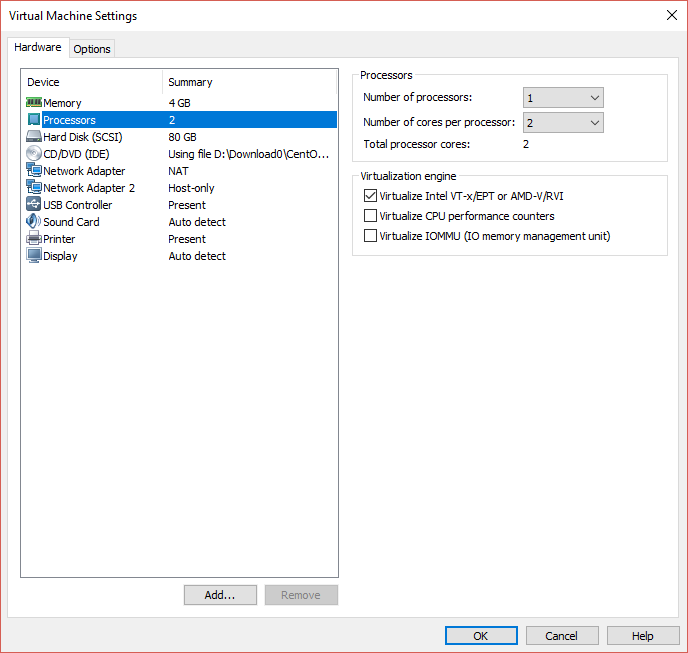

Compute01

[root@compute01 ~]# ls -al /etc/sysconfig/network-scripts/ifcfg-*

-rw-r--r-- 1 root root 369 Dec 13 22:22 /etc/sysconfig/network-scripts/ifcfg-ens33

-rw-r--r-- 1 root root 326 Dec 13 22:23 /etc/sysconfig/network-scripts/ifcfg-ens37

-rw-r--r--. 1 root root 254 May 3 2017 /etc/sysconfig/network-scripts/ifcfg-lo

Update the 1st network adapter:

[root@compute01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=fdab3eb4-d1c6-46e4-a09e-f3cff2c23514

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.220.51

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 2nd network adapter:

[root@compute01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

UUID=0157d35c-ced3-4c0c-a519-48cb2a11e8db

DEVICE=ens37

ONBOOT=yes

IPADDR=192.168.200.51

NETMASK=255.255.255.0

Compute02

[root@compute02 ~]# ls -al /etc/sysconfig/network-scripts/ifcfg-*

-rw-r--r-- 1 root root 369 Dec 13 22:22 /etc/sysconfig/network-scripts/ifcfg-ens33

-rw-r--r-- 1 root root 326 Dec 13 22:23 /etc/sysconfig/network-scripts/ifcfg-ens37

-rw-r--r--. 1 root root 254 May 3 2017 /etc/sysconfig/network-scripts/ifcfg-lo

Update the 1st network adapter:

[root@compute02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=e8cf9148-b8dd-4879-8851-f523bb0cefd0

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.220.61

NETMASK=255.255.255.0

GATEWAY=192.168.220.2

DNS1=114.114.114.114

Update the 2nd network adapter:

[root@compute02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

UUID=308add2b-09df-495f-b706-5afbdff0ff9e

DEVICE=ens37

ONBOOT=yes

IPADDR=192.168.200.61

NETMASK=255.255.255.0
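
With this many near-identical files, a misspelled key (for example `NETMAKE` instead of `NETMASK`) is easy to miss. A quick sanity check along these lines could be run on every node before restarting the network. It is a sketch only: `check_ifcfg` is a hypothetical helper, and the key list simply reflects the static settings used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: report required keys missing from an ifcfg file.
# Prints one line per absent key and returns non-zero if any key is missing.
check_ifcfg() {
    file="$1"
    status=0
    for key in DEVICE ONBOOT BOOTPROTO IPADDR NETMASK; do
        if ! grep -q "^${key}=" "$file"; then
            echo "missing $key in $file"
            status=1
        fi
    done
    return "$status"
}

# Example:
# for f in /etc/sysconfig/network-scripts/ifcfg-ens*; do check_ifcfg "$f"; done
```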

Update Hosts File

[root@controller1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.220.20 controller # virtual IP

192.168.220.21 controller1

192.168.220.22 controller2

192.168.220.23 controller3

192.168.220.51 compute01

192.168.220.61 compute02
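
The same entries are needed on every node. One way to add them without creating duplicates on repeated runs is sketched below; `add_host_entry` is a hypothetical helper, and it assumes the usual `IP name [alias...]` layout of a hosts file.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# Usage: add_host_entry IP NAME [HOSTS_FILE]   (HOSTS_FILE defaults to /etc/hosts)
add_host_entry() {
    ip="$1"
    name="$2"
    hosts_file="${3:-/etc/hosts}"
    # Look for NAME as a whole field on a non-comment line,
    # ignoring anything after a trailing "#" comment.
    if awk -v n="$name" '
        !/^[ \t]*#/ {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break
                if ($i == n) found = 1
            }
        }
        END { exit !found }' "$hosts_file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example:
# add_host_entry 192.168.220.20 controller
# add_host_entry 192.168.220.21 controller1
# add_host_entry 192.168.220.51 compute01
```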

Controller SSH

[root@controller1 ~]# ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Created directory '/root/.ssh'.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:RJz627PplvqRVorpdiRPXLZ15aiQD/YruvXT/Cu1sSo root@controller1

The key's randomart image is:

+---[RSA 2048]----+

…

.o .

.. . o.

.. = o o o

.So B.+ .

oo++= o

oB*. +. +

.ooOEo.oo

.=O=+ooooo

+----[SHA256]-----+

[root@controller1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@controller2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'controller2 (192.168.220.22)' can't be established.

ECDSA key fingerprint is SHA256:Q5vLVMvx0+FWYxqM263f4//dH72IwEgT/bWeOIlwTfY.

ECDSA key fingerprint is MD5:b5:6a:fb:3f:be:98:f7:d9:71:4e:d1:89:c4:7f:8f:00.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@controller2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@controller2'"

and check to make sure that only the key(s) you wanted were added.

[root@controller1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@controller3

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'controller3 (192.168.220.23)' can't be established.

ECDSA key fingerprint is SHA256:Q5vLVMvx0+FWYxqM263f4//dH72IwEgT/bWeOIlwTfY.

ECDSA key fingerprint is MD5:b5:6a:fb:3f:be:98:f7:d9:71:4e:d1:89:c4:7f:8f:00.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@controller3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@controller3'"

and check to make sure that only the key(s) you wanted were added.

[root@controller1 ~]# ssh controller2

Last login: Thu Dec 14 00:47:15 2017 from 192.168.220.1

[root@controller2 ~]# exit

logout

Connection to controller2 closed.

[root@controller1 ~]# ssh controller3

Last login: Thu Dec 14 00:47:16 2017 from 192.168.220.1

[root@controller3 ~]# exit

logout

Connection to controller3 closed.

[root@controller1 ~]#
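
The two `ssh-copy-id` calls above can be folded into a loop when more hosts are involved. This is a rough sketch under assumptions: `distribute_key` and the `DRY_RUN` switch are hypothetical names introduced here, and the key path matches the one generated above.

```shell
#!/bin/sh
# Hypothetical helper: copy the root public key to each host given as an argument.
# With DRY_RUN=1 the commands are printed instead of executed.
distribute_key() {
    pubkey="$HOME/.ssh/id_rsa.pub"
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh-copy-id -i $pubkey root@$host"
        else
            ssh-copy-id -i "$pubkey" "root@$host"
        fi
    done
}

# Example: preview first, then run for real (each host prompts for its password once).
# DRY_RUN=1 distribute_key controller2 controller3
# distribute_key controller2 controller3
```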

Use Local Repository

Refer to "CLOUD COMPUTING -> OpenStack High Availability -> 2. Create Local Repository"

Use Ali Mirror

[root@localrepo ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@localrepo ~]# cat /etc/yum.repos.d/CentOS-Pike.repo

[centos-openstack-pike]

name=centos-openstack-pike

baseurl=https://mirrors.aliyun.com/centos/$releasever/cloud/$basearch/openstack-pike/

gpgcheck=0

enabled=1

[centos-qemu-ev]

name=centos-qemu-ev

baseurl=https://mirrors.aliyun.com/centos/$releasever/virt/$basearch/kvm-common/

gpgcheck=0

enabled=1

Sync Repo

[root@localrepo myrepo]# reposync --repoid=centos-openstack-pike

[root@localrepo myrepo]# reposync --repoid=centos-qemu-ev

[root@localrepo myrepo]# ls -al

total 120

drwxr-xr-x. 7 root root 98 Dec 14 02:51 .

drwxr-xr-x. 3 root root 20 Dec 13 03:03 ..

drwxr-xr-x. 4 root root 38 Dec 14 02:30 base

drwxr-xr-x. 3 root root 90112 Dec 14 02:50 centos-openstack-pike

drwxr-xr-x. 2 root root 4096 Dec 14 02:51 centos-qemu-ev

drwxr-xr-x. 4 root root 38 Dec 14 02:31 extras

drwxr-xr-x. 4 root root 38 Dec 14 02:31 updates

Create Repo

[root@localrepo myrepo]# createrepo /var/www/html/myrepo/centos-openstack-pike

Spawning worker 0 with 1091 pkgs

Spawning worker 1 with 1091 pkgs

Workers Finished

Saving Primary metadata

Saving file lists metadata

Saving other metadata

Generating sqlite DBs

Sqlite DBs complete

[root@localrepo myrepo]# createrepo /var/www/html/myrepo/centos-qemu-ev/

Spawning worker 0 with 18 pkgs

Spawning worker 1 with 17 pkgs

Workers Finished

Saving Primary metadata

Saving file lists metadata

Saving other metadata

Generating sqlite DBs

Sqlite DBs complete
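
The sync and metadata steps repeat for every repository, so they could be wrapped in a loop. The sketch below is an assumption-laden illustration rather than the procedure used above: `sync_repos`, `run`, `REPO_ROOT` and `DRY_RUN` are hypothetical names, and it passes `--download_path` to `reposync` explicitly instead of relying on the current directory.

```shell
#!/bin/sh
# Assumed location of the local mirror (matches the createrepo paths above).
REPO_ROOT="${REPO_ROOT:-/var/www/html/myrepo}"

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: sync each repo id into REPO_ROOT, then build its metadata.
sync_repos() {
    for repo_id in "$@"; do
        run reposync --repoid="$repo_id" --download_path="$REPO_ROOT" || return 1
        run createrepo "$REPO_ROOT/$repo_id" || return 1
    done
}

# Example:
# DRY_RUN=1 sync_repos centos-openstack-pike centos-qemu-ev
# sync_repos centos-openstack-pike centos-qemu-ev
```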

Add Local Mirror On Client Nodes

[root@controller1 ~]# cat /etc/yum.repos.d/CentOS-Pike.repo

[centos-openstack-pike]

name=centos-openstack-pike

baseurl=http://192.168.220.200/myrepo/centos-openstack-pike/

gpgcheck=0

enabled=1

[centos-qemu-ev]

name=centos-qemu-ev

baseurl=http://192.168.220.200/myrepo/centos-qemu-ev/

gpgcheck=0

enabled=1

[root@controller1 ~]# rsync -avzP -e 'ssh -p 22' /etc/yum.repos.d/CentOS-Pike.repo controller2:/etc/yum.repos.d/

sending incremental file list

CentOS-Pike.repo

247 100% 0.00kB/s 0:00:00 (xfer#1, to-check=0/1)

sent 195 bytes received 31 bytes 452.00 bytes/sec

total size is 247 speedup is 1.09

[root@controller1 ~]# rsync -avzP -e 'ssh -p 22' /etc/yum.repos.d/CentOS-Pike.repo controller3:/etc/yum.repos.d/

sending incremental file list

CentOS-Pike.repo

247 100% 0.00kB/s 0:00:00 (xfer#1, to-check=0/1)

sent 195 bytes received 31 bytes 452.00 bytes/sec

total size is 247 speedup is 1.09
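
If the address of the local repository server changes, the client repo file has to be rewritten on every node. A small generator such as the following could produce it from one base URL. It is a sketch only: `repo_stanza` is a hypothetical helper, and it assumes each repository lives in a directory named after its repo id, as in the listing above.

```shell
#!/bin/sh
# Hypothetical helper: print a yum repo stanza for one repo id served under BASE_URL.
# Usage: repo_stanza BASE_URL REPO_ID
repo_stanza() {
    base_url="$1"
    repo_id="$2"
    printf '[%s]\nname=%s\nbaseurl=%s/%s/\ngpgcheck=0\nenabled=1\n' \
        "$repo_id" "$repo_id" "$base_url" "$repo_id"
}

# Example: rebuild the client file from the two repositories used in this guide.
# {
#     repo_stanza http://192.168.220.200/myrepo centos-openstack-pike
#     repo_stanza http://192.168.220.200/myrepo centos-qemu-ev
# } > /etc/yum.repos.d/CentOS-Pike.repo
```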

Install OpenStack Environment On All Nodes

[root@controller1 ~]# yum install centos-release-openstack-pike -y

[root@controller1 ~]# yum install python-openstackclient openstack-selinux python2-PyMySQL openstack-utils -y

[root@controller1 ~]# yum update -y